Ethical AI in Recruiting: Balancing Innovation with Privacy and Compliance

Artificial intelligence (AI) is revolutionizing the recruitment landscape, offering tools that streamline processes, enhance candidate experiences, and improve decision-making. However, the integration of AI into hiring practices brings forth ethical challenges, particularly concerning privacy, data usage, and compliance. Balancing innovation with ethical responsibility is crucial to harness AI’s potential without compromising individual rights or organizational integrity.

The Rise of AI in Recruitment

Organizations are increasingly using AI across HR processes such as recruitment, performance evaluation, and employee engagement.

AI-powered tools can sift through large volumes of applications, identify suitable candidates, and even conduct preliminary assessments, significantly reducing the time and resources spent on hiring. For instance, AI-driven chatbots can handle initial candidate interactions, providing instant responses and gathering essential information.

Ethical Challenges in AI-Driven Recruitment

Despite its advantages, AI in recruitment presents several ethical concerns:

- Bias and Discrimination: AI systems learn from historical data, which may contain inherent biases. If past hiring practices favored certain demographics, AI could perpetuate these biases, leading to unfair treatment of candidates from underrepresented groups. Notably, Amazon reportedly discontinued an experimental AI hiring tool after discovering that it favored male candidates over female ones, because it had been trained on resumes submitted over a ten-year period that came predominantly from men.

- Privacy Concerns: AI tools often require access to personal data, raising concerns about how this information is collected, stored, and used. Using AI to analyze candidates’ social media profiles or other personal information without explicit consent can infringe on privacy rights. AI’s ability to analyze vast amounts of data can also lead to the unintended exposure of sensitive information, a concern that has been raised about tools like Microsoft’s Copilot.

- Lack of Transparency: Many AI algorithms operate as “black boxes,” making it challenging to understand how decisions are made. This opacity can lead to mistrust among candidates and make it difficult for organizations to identify and correct potential biases. The emerging field of Explainable AI (XAI) aims to address this by making AI decision-making more transparent, but its application in recruitment is still developing.

- Compliance with Regulations: The regulatory landscape for AI is evolving. Organizations must ensure that their AI-driven recruitment practices comply with rules such as the General Data Protection Regulation (GDPR) in Europe and the anti-discrimination laws and guidance enforced by the Equal Employment Opportunity Commission (EEOC) in the United States. Non-compliance can result in legal repercussions and damage to the company’s reputation. For instance, the EU AI Act, adopted in 2024, treats AI systems used for recruitment and candidate selection as high-risk and attaches stringent obligations to them, with hefty fines for non-compliance.

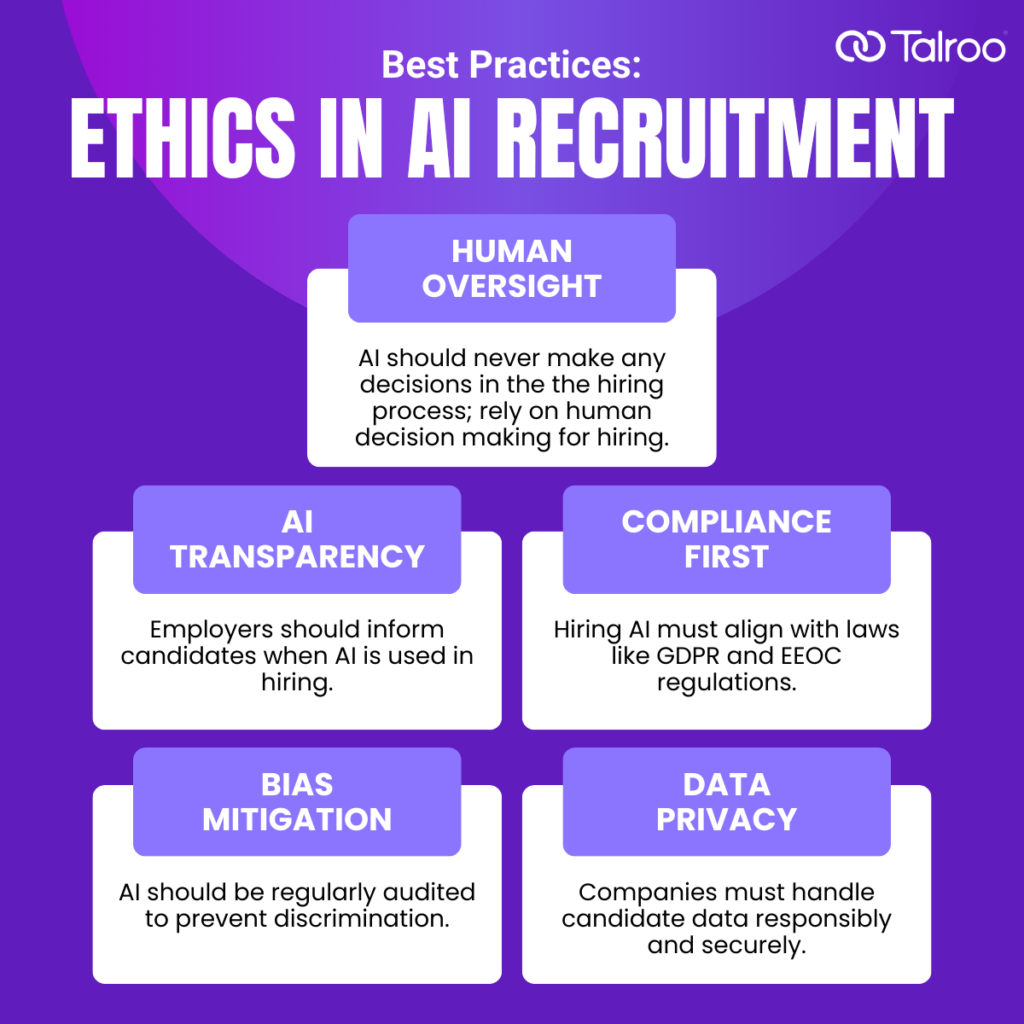

Strategies for Ethical AI Implementation in Recruitment

To address these challenges, organizations should consider the following strategies:

- Ensure Data Quality and Diversity: Training AI systems on diverse and representative datasets can help mitigate biases. Regular audits of these datasets are essential to identify and correct any imbalances. Collaborating with diverse teams in AI development can further help in recognizing and addressing potential biases.

- Maintain Transparency and Explainability: Implementing AI systems that provide clear explanations for their decisions can build trust with candidates and stakeholders. Organizations should prioritize transparency in their AI tools and consider adopting Explainable AI (XAI) methodologies to make decision-making processes more understandable.

- Obtain Informed Consent: Candidates should be informed about the use of AI in the recruitment process and how their data will be utilized. Obtaining explicit consent not only respects privacy but also aligns with legal requirements. Clear communication about data usage can enhance trust and candidate experience.

- Regular Audits and Monitoring: Continuous evaluation of AI systems is crucial to ensure they function as intended. Regular audits can help detect and rectify biases or inaccuracies, ensuring fairness and compliance. Establishing a dedicated team to oversee AI ethics and compliance can further strengthen this process.

- Human Oversight: While AI can assist in decision-making, human judgment should remain integral to the recruitment process. Human oversight helps ensure that contextual nuances are considered and that ethical standards are upheld. Balancing AI efficiency with human empathy can lead to more equitable hiring practices.

- Stay Updated with Regulations: As AI regulations evolve, organizations must stay informed and adapt their practices accordingly. Engaging legal experts to navigate the complex regulatory environment can help maintain compliance and avoid potential pitfalls. Proactively adjusting to new laws demonstrates a commitment to ethical practices.
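As one illustration of what a regular audit might check, the sketch below compares selection rates across candidate groups and flags any group whose rate falls below 80% of the highest group's rate. This is the "four-fifths" rule of thumb from the EEOC's Uniform Guidelines on Employee Selection Procedures; it is not mentioned in this article and is used here only as an example metric, not as a complete fairness or legal test. The group labels, the function names, and the outcome data are all hypothetical.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: selected / total} for an iterable of (group, was_selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected at all, so no disparity can be measured.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Return the groups whose impact ratio falls below the threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening outcomes: (group label, advanced past the AI screen?)
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)

print(rates)
print(ratios)
print(flagged)
```

A flagged group is a prompt for human investigation rather than proof of discrimination; small sample sizes in particular can make the ratio unreliable.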

The Future of Ethical AI in Recruitment

As AI technology continues to evolve, its role in recruitment will likely expand. Embracing ethical AI is not just a regulatory necessity—it’s a strategic advantage. Companies that prioritize fairness, transparency, and compliance will build stronger employer brands, foster greater trust among candidates, and ultimately attract top talent. To prepare for the future, organizations should consider the following ethical AI best practices:

- Stay proactive in AI governance by continuously refining policies and practices to align with emerging regulations and ethical standards.

- Invest in AI literacy among HR teams to ensure responsible implementation and informed decision-making.

- Encourage cross-functional collaboration between HR, legal, and technology teams to create robust AI frameworks that prioritize fairness and accountability.

- Leverage AI responsibly by using it as a tool to enhance—rather than replace—human judgment in hiring.
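To make the last practice concrete, here is a minimal sketch of a screening step in which the AI score only prioritizes candidates and never rejects anyone on its own. It assumes a hypothetical `Candidate` record, a hypothetical score between 0 and 1, and an arbitrary threshold of 0.7; none of these come from a specific product or regulation. Candidates who have not consented to AI screening are routed to a fully manual path.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    candidate_id: str
    consented_to_ai: bool
    ai_score: Optional[float] = None  # hypothetical score in [0, 1]; None if not scored


def route(candidate: Candidate, advance_threshold: float = 0.7) -> str:
    """Decide which human-owned queue a candidate goes to.

    Every outcome ends with a person: the AI score only orders the work.
    """
    if not candidate.consented_to_ai or candidate.ai_score is None:
        # No consent (or no score): screen without AI involvement.
        return "manual_screening"
    if candidate.ai_score >= advance_threshold:
        # High score: a recruiter still confirms the shortlist decision.
        return "shortlist_pending_recruiter"
    # Low score: a recruiter reviews before any rejection is sent.
    return "recruiter_review_before_rejection"


# Hypothetical candidates
queue = [
    Candidate("c-001", consented_to_ai=True, ai_score=0.82),
    Candidate("c-002", consented_to_ai=True, ai_score=0.41),
    Candidate("c-003", consented_to_ai=False),
]

decisions = {c.candidate_id: route(c) for c in queue}
print(decisions)
```

The design choice worth noting is that there is no "auto_reject" outcome: the threshold changes who reviews a candidate and in what order, not whether a human looks at the application.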

The ethical use of AI in recruitment isn’t just about compliance; it’s about shaping a hiring process that is fair, inclusive, and effective. By striking the right balance between innovation and responsibility, organizations can unlock AI’s full potential while safeguarding candidate rights and upholding ethical hiring practices.